This page was generated from examples/single.ipynb.

Single-Order Spectrum¶

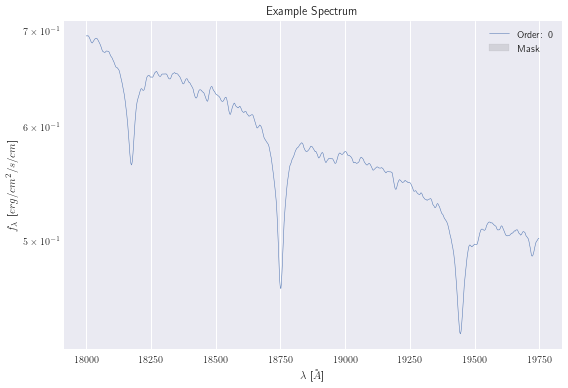

This will show how to fit a single-order spectrum using our previous setup on some ~mysterious~ IRTF SpeX data. The spectrum is available for download here.

Note: This documentation is not meant to be an exhaustive tour of Starfish’s features, but rather a simple example showing a workflow typical of fitting data.

Preprocessing¶

Normally, you would pre-process your data. This includes loading the fits files, separating out the wavelengths, fluxes, uncertainties, and any masks. In addition, you would need to convert your data into the same units as your emulator. In our case, the PHOENIX emulator uses \(A\) and \(erg/cm^2/s/cm\). For this example, though, I’ve already created a spectrum that you can load directly.

[1]:

%matplotlib inline

import matplotlib.pyplot as plt

plt.style.use("seaborn")

plt.rcParams["text.usetex"] = True

[2]:

from Starfish.spectrum import Spectrum

data = Spectrum.load("example_spec.hdf5")

data.plot();

Setting up the model¶

Now we can set up our initial model. We need, at minimum, an emulator, our data, and a set of the library grid parameters. Every extra keyword argument we add is added to our list of parameters. For more information on what parameters are available and what effect they have, see the SpectrumModel documentation.

Some of these parameters are based on guesses or pre-existing knowledge. In particular, if you want to fit log_scale, you should spend some time tuning it by eye, first. We also want our global_cov:log_amp to be reasonable, so pay attention to the \(\sigma\)-contours in the residuals plots, too.

There aren’t any previous in-depth works on this star, so we will start with some values based on the spectral type alone.

[3]:

from Starfish.models import SpectrumModel

model = SpectrumModel(

"F_SPEX_emu.hdf5",

data,

grid_params=[6800, 4.2, 0],

Av=0,

global_cov=dict(log_amp=38, log_ls=2),

)

model

[3]:

SpectrumModel

-------------

Data: Example Spectrum

Emulator: F_SPEX_emu

Log Likelihood: None

Parameters

Av: 0

T: 6800

Z: 0

global_cov:

log_amp: 38

log_ls: 2

logg: 4.2

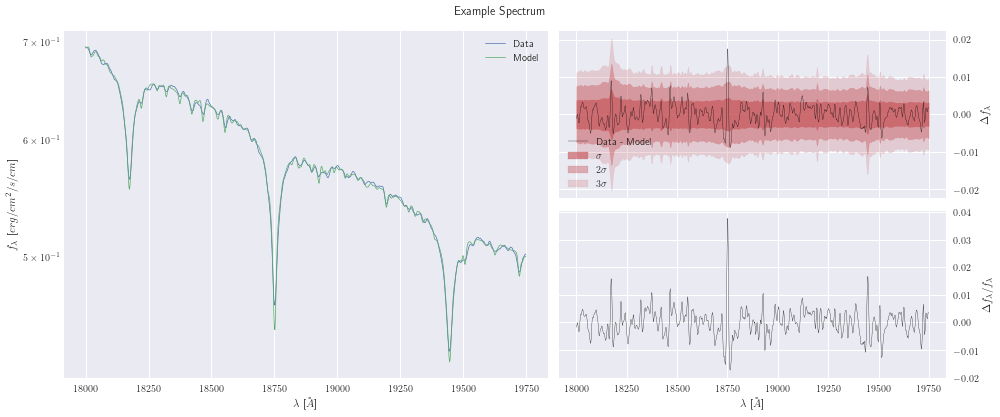

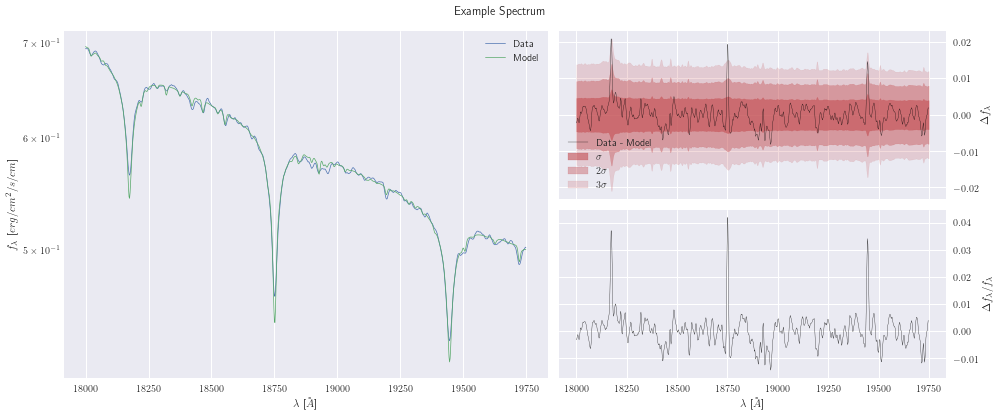

In this plot, we can see the data and model in the left pane, the absolute errors (residuals) along with the diagonal of the covariance matrix as \(\sigma\) contours in the top-right, and the relative errors (residuals / flux) in the bottom-right

[5]:

model.plot();

Numerical Optimization¶

Now lets do a maximum a posteriori (MAP) point estimate for our data.

Here we freeze logg here because the PHOENIX models’ response to logg compared to our data are relatively flat, so we fix the value using the freeze mechanics. This is equivalent to applying a \(\delta\)-function prior.

[6]:

model.freeze("logg")

model.labels # These are the fittable parameters

[6]:

('Av', 'T', 'Z', 'global_cov:log_amp', 'global_cov:log_ls')

Here we specify some priors using scipy.stats classes. If you have a custom distribution you want to use, create a class and make sure it has a logpdf member function.

[8]:

import scipy.stats as st

priors = {

"T": st.norm(6800, 100),

"Z": st.uniform(-0.5, 0.5),

"Av": st.halfnorm(0, 0.2),

"global_cov:log_amp": st.norm(38, 1),

"global_cov:log_ls": st.uniform(0, 10),

}

Using the above priors, we can do our MAP optimization using scipy.optimize.minimze, which is usefully baked into the train method of our model. This should give us a good starting point for our MCMC sampling later.

[9]:

%time model.train(priors)

CPU times: user 9min 32s, sys: 1min 37s, total: 11min 10s

Wall time: 1min 37s

[9]:

final_simplex: (array([[ 8.54783896e-12, 7.02369877e+03, -4.45788754e-04,

3.88334287e+01, 4.14479840e+00],

[ 1.48310582e-11, 7.02369876e+03, -4.45788836e-04,

3.88334288e+01, 4.14479845e+00],

[ 1.22696417e-11, 7.02369877e+03, -4.45788845e-04,

3.88334288e+01, 4.14479844e+00],

[ 1.00040208e-11, 7.02369885e+03, -4.45788664e-04,

3.88334286e+01, 4.14479836e+00],

[ 1.67318797e-11, 7.02369882e+03, -4.45788773e-04,

3.88334287e+01, 4.14479842e+00],

[ 1.32087844e-11, 7.02369883e+03, -4.45788591e-04,

3.88334285e+01, 4.14479835e+00]]), array([-5885.18256651, -5885.18256651, -5885.18256651, -5885.18256651,

-5885.18256651, -5885.18256651]))

fun: -5885.18256651297

message: 'Optimization terminated successfully.'

nfev: 771

nit: 453

status: 0

success: True

x: array([ 8.54783896e-12, 7.02369877e+03, -4.45788754e-04, 3.88334287e+01,

4.14479840e+00])

[11]:

model

[11]:

SpectrumModel

-------------

Data: Example Spectrum

Emulator: F_SPEX_emu

Log Likelihood: 5894.70076364305

Parameters

Av: 8.547838957698344e-12

T: 7023.698772270976

Z: -0.00044578875442483404

global_cov:

log_amp: 38.8334286761706

log_ls: 4.1447983961624875

Frozen Parameters

logg: 4.2

[13]:

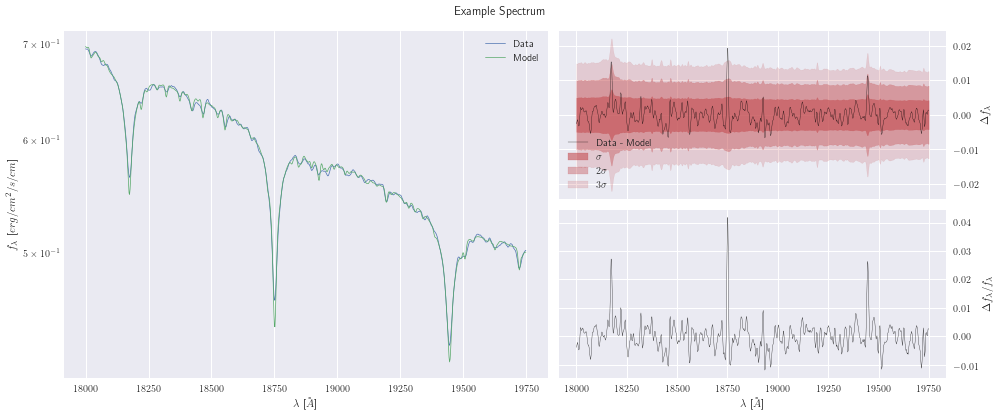

model.plot();

[14]:

model.save("example_MAP.toml")

MCMC Sampling¶

Now, we will sample from our model. Note the flexibility we provide with Starfish in order to allow sampler front-end that allows blackbox likelihood methods. In our case, we will continue with emcee, which provides an ensemble sampler. We are using pre-release of version 3.0. This document serves only as an example, and details about emcee’s usage should be sought after in its documentation.

For this basic example, I will freeze both the global and local covariance parameters, so we are only sampling over T, Z, and Av.

[15]:

import emcee

emcee.__version__

[15]:

'3.0.0'

[17]:

model.load("example_MAP.toml")

model.freeze("global_cov")

model.labels

[17]:

('Av', 'T', 'Z')

[19]:

import numpy as np

# Set our walkers and dimensionality

nwalkers = 50

ndim = len(model.labels)

# Initialize gaussian ball for starting point of walkers

scales = {"T": 1, "Av": 0.01, "Z": 0.01}

ball = np.random.randn(nwalkers, ndim)

for i, key in enumerate(model.labels):

ball[:, i] *= scales[key]

ball[:, i] += model[key]

[20]:

# our objective to maximize

def log_prob(P, priors):

model.set_param_vector(P)

return model.log_likelihood(priors)

# Set up our backend and sampler

backend = emcee.backends.HDFBackend("example_chain.hdf5")

backend.reset(nwalkers, ndim)

sampler = emcee.EnsembleSampler(

nwalkers, ndim, log_prob, args=(priors,), backend=backend

)

here we start our sampler, and following this example we check every 10 steps for convergence, with a max burn-in of 1000 samples.

Warning: This process can take a long time to finish. In cases with high resolution spectra or fully evaluating each nuisance covariance parameter, we recommend running on a remote machine. A setup I recommend is a remote jupyter server, so you don’t have to create any scripts and can keeping working in notebooks.

[21]:

max_n = 1000

# We'll track how the average autocorrelation time estimate changes

index = 0

autocorr = np.empty(max_n)

# This will be useful to testing convergence

old_tau = np.inf

# Now we'll sample for up to max_n steps

for sample in sampler.sample(ball, iterations=max_n, progress=True):

# Only check convergence every 100 steps

if sampler.iteration % 10:

continue

# Compute the autocorrelation time so far

# Using tol=0 means that we'll always get an estimate even

# if it isn't trustworthy

tau = sampler.get_autocorr_time(tol=0)

autocorr[index] = np.mean(tau)

index += 1

# skip math if it's just going to yell at us

if not np.isfinite(tau).all():

continue

# Check convergence

converged = np.all(tau * 10 < sampler.iteration)

converged &= np.all(np.abs(old_tau - tau) / tau < 0.01)

if converged:

print(f"Converged at sample {sampler.iteration}")

break

old_tau = tau

/Users/miles/.pyenv/versions/3.7.4/Python.framework/Versions/3.7/lib/python3.7/site-packages/emcee/ensemble.py:258: RuntimeWarning: Initial state is not linearly independent and it will not allow a full exploration of parameter space

category=RuntimeWarning,

0%| | 0/1000 [00:00<?, ?it/s]/Users/miles/.pyenv/versions/3.7.4/Python.framework/Versions/3.7/lib/python3.7/site-packages/emcee/moves/red_blue.py:97: RuntimeWarning: invalid value encountered in double_scalars

lnpdiff = f + nlp - state.log_prob[j]

1%| | 10/1000 [00:22<40:51, 2.48s/it]/Users/miles/.pyenv/versions/3.7.4/Python.framework/Versions/3.7/lib/python3.7/site-packages/emcee/autocorr.py:38: RuntimeWarning: invalid value encountered in true_divide

acf /= acf[0]

/Users/miles/.pyenv/versions/3.7.4/Python.framework/Versions/3.7/lib/python3.7/site-packages/emcee/autocorr.py:43: RuntimeWarning: invalid value encountered in less

m = np.arange(len(taus)) < c * taus

/Users/miles/.pyenv/versions/3.7.4/Python.framework/Versions/3.7/lib/python3.7/site-packages/emcee/autocorr.py:101: RuntimeWarning: invalid value encountered in greater

flag = tol * tau_est > n_t

42%|████▏ | 420/1000 [35:41<49:16, 5.10s/it]

Converged at sample 420

After our model has converged, let’s take a few extra samples to make sure we have clean chains. Remember, we have 50 walkers, so 100 samples ends up becoming 5000 across each chain!

[22]:

sampler.run_mcmc(backend.get_last_sample(), 100, progress=True);

100%|██████████| 100/100 [08:54<00:00, 5.34s/it]

MCMC Chain Analysis¶

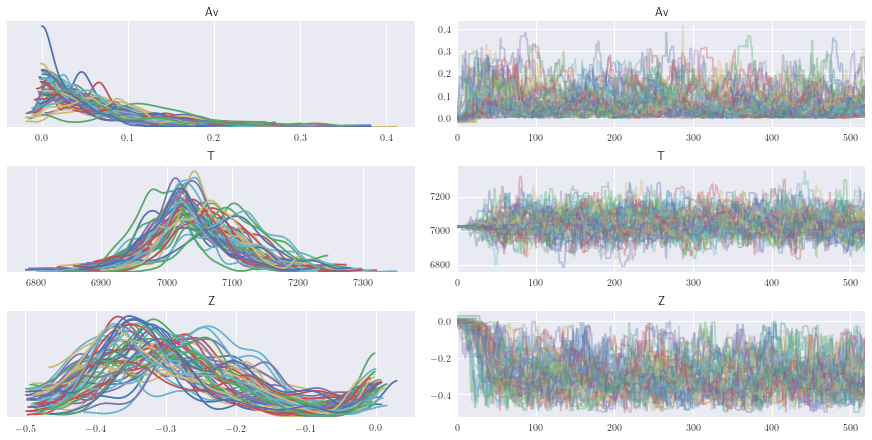

Chain analysis is a very broad topic that is mostly out of the scope of this example. For our analysis, we like using ArviZ with a simple corner plot as well.

[23]:

import arviz as az

import corner

print(az.__version__, corner.__version__)

0.6.0 1.0.3

[24]:

reader = emcee.backends.HDFBackend("example_chain.hdf5")

full_data = az.from_emcee(reader, var_names=model.labels)

[25]:

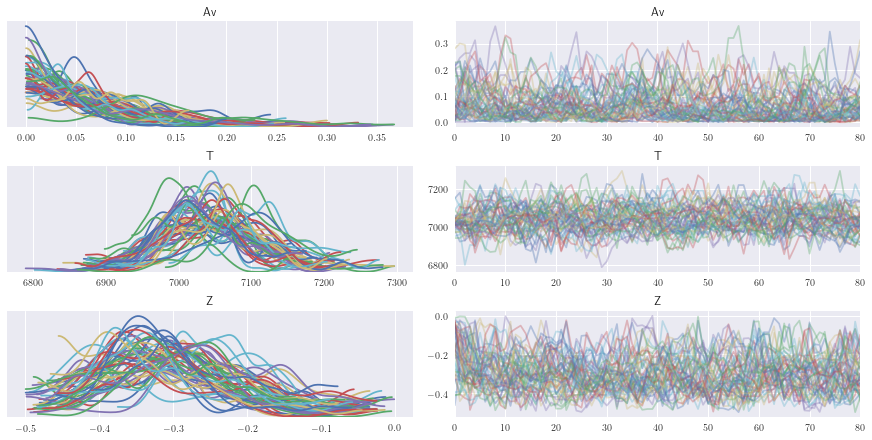

az.plot_trace(full_data);

/Users/miles/.pyenv/versions/3.7.4/Python.framework/Versions/3.7/lib/python3.7/site-packages/arviz/plots/backends/matplotlib/distplot.py:38: UserWarning: Argument backend_kwargs has not effect in matplotlib.plot_distSupplied value won't be used

"Argument backend_kwargs has not effect in matplotlib.plot_dist"

/Users/miles/.pyenv/versions/3.7.4/Python.framework/Versions/3.7/lib/python3.7/site-packages/arviz/plots/backends/matplotlib/distplot.py:38: UserWarning: Argument backend_kwargs has not effect in matplotlib.plot_distSupplied value won't be used

"Argument backend_kwargs has not effect in matplotlib.plot_dist"

/Users/miles/.pyenv/versions/3.7.4/Python.framework/Versions/3.7/lib/python3.7/site-packages/arviz/plots/backends/matplotlib/distplot.py:38: UserWarning: Argument backend_kwargs has not effect in matplotlib.plot_distSupplied value won't be used

"Argument backend_kwargs has not effect in matplotlib.plot_dist"

After seeing our full traces, let’s discard and thin some of the burn-in

[26]:

tau = reader.get_autocorr_time(tol=0)

burnin = int(tau.max())

thin = int(0.3 * np.min(tau))

burn_samples = reader.get_chain(discard=burnin, thin=thin)

log_prob_samples = reader.get_log_prob(discard=burnin, thin=thin)

log_prior_samples = reader.get_blobs(discard=burnin, thin=thin)

dd = dict(zip(model.labels, burn_samples.T))

burn_data = az.from_dict(dd)

[27]:

az.plot_trace(burn_data);

/Users/miles/.pyenv/versions/3.7.4/Python.framework/Versions/3.7/lib/python3.7/site-packages/arviz/plots/backends/matplotlib/distplot.py:38: UserWarning: Argument backend_kwargs has not effect in matplotlib.plot_distSupplied value won't be used

"Argument backend_kwargs has not effect in matplotlib.plot_dist"

/Users/miles/.pyenv/versions/3.7.4/Python.framework/Versions/3.7/lib/python3.7/site-packages/arviz/plots/backends/matplotlib/distplot.py:38: UserWarning: Argument backend_kwargs has not effect in matplotlib.plot_distSupplied value won't be used

"Argument backend_kwargs has not effect in matplotlib.plot_dist"

/Users/miles/.pyenv/versions/3.7.4/Python.framework/Versions/3.7/lib/python3.7/site-packages/arviz/plots/backends/matplotlib/distplot.py:38: UserWarning: Argument backend_kwargs has not effect in matplotlib.plot_distSupplied value won't be used

"Argument backend_kwargs has not effect in matplotlib.plot_dist"

[28]:

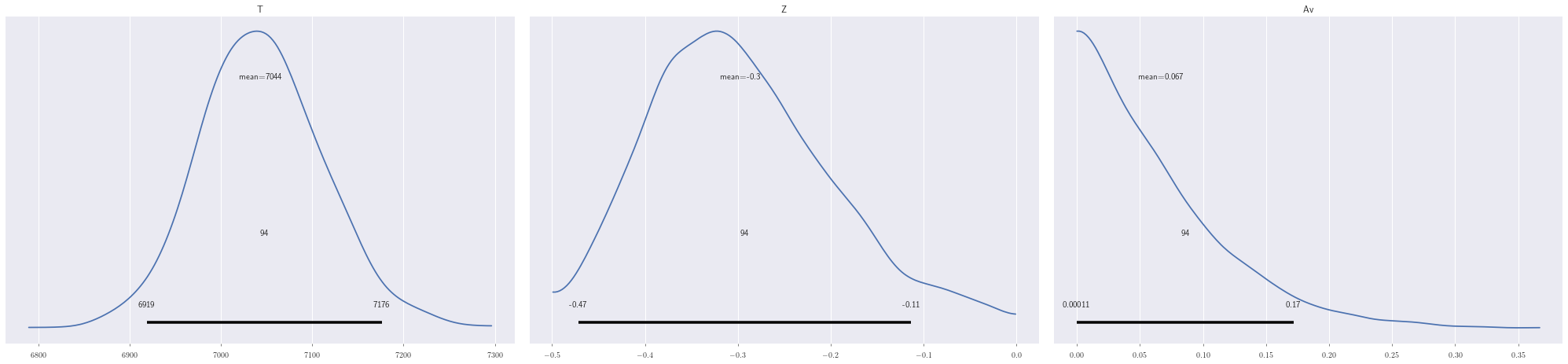

az.summary(burn_data)

[28]:

| mean | sd | hpd_3% | hpd_97% | mcse_mean | mcse_sd | ess_mean | ess_sd | ess_bulk | ess_tail | r_hat | |

|---|---|---|---|---|---|---|---|---|---|---|---|

| Av | 0.067 | 0.058 | 0.000 | 0.172 | 0.003 | 0.002 | 415.0 | 415.0 | 494.0 | 932.0 | 1.08 |

| T | 7043.581 | 68.071 | 6919.282 | 7176.220 | 3.812 | 2.701 | 319.0 | 318.0 | 322.0 | 1564.0 | 1.12 |

| Z | -0.297 | 0.097 | -0.472 | -0.113 | 0.005 | 0.003 | 434.0 | 434.0 | 440.0 | 1686.0 | 1.09 |

[30]:

az.plot_posterior(burn_data, ["T", "Z", "Av"]);

[31]:

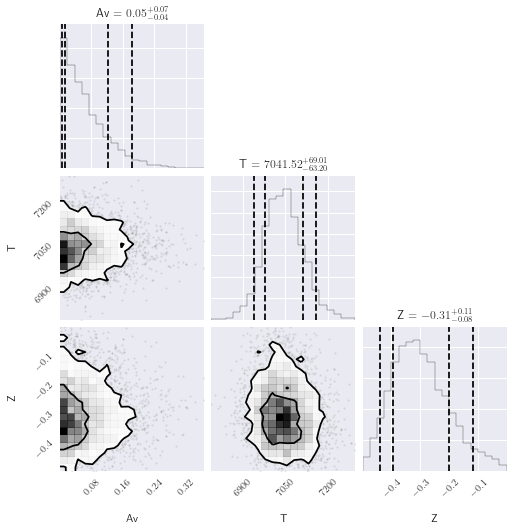

# See https://corner.readthedocs.io/en/latest/pages/sigmas.html#a-note-about-sigmas

sigmas = ((1 - np.exp(-0.5)), (1 - np.exp(-2)))

corner.corner(

burn_samples.reshape((-1, 3)),

labels=model.labels,

quantiles=(0.05, 0.16, 0.84, 0.95),

levels=sigmas,

show_titles=True,

);

After looking at our posteriors, let’s look at our fit

[32]:

best_fit = dict(az.summary(burn_data)["mean"])

model.set_param_dict(best_fit)

model

[32]:

SpectrumModel

-------------

Data: Example Spectrum

Emulator: F_SPEX_emu

Log Likelihood: None

Parameters

Av: 0.067

T: 7043.581

Z: -0.297

Frozen Parameters

logg: 4.2

global_cov:log_amp: 38.8334286761706

global_cov:log_ls: 4.1447983961624875

[34]:

model.plot();

and finally, we can save our best fit.

[35]:

model.save("example_sampled.toml")

Now, on to the next star!